Hi,

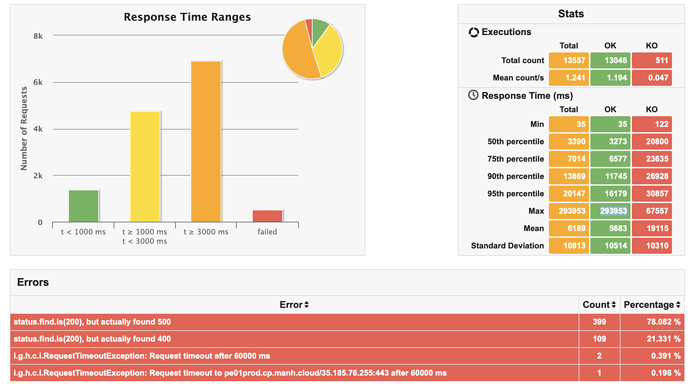

I have a question regarding response time calculation. Some of the values I see in the generated report are way too high, much higher than the application’s configured timeout of 1 minute.

The simulation includes POST APIs.

I’m trying to figure out what delay might be getting added to the actual response time, or which cases could lead to this.

Hi,

Sorry, but there’s really no way to tell if you don’t provide a reproducer as described here.

Maybe you’re running low on CPU. Maybe you have an anti-virus.

Sure, will try.

We are using a Gradle-based Gatling container on a gcloud jumpbox where overall CPU utilization remains below 50%. The container has no CPU/memory limits set.

It’s just an API being called and parsed in a loop. I was a little confused about how the response time is calculated. We don’t see this issue with our Java-based in-house utility running on the same machine.

With Gatling we were also facing another issue where the execution would get stuck every 3 to 5 minutes. Container stats showed no high resource consumption. I tried going through the HTTP config; in the end, distributing the load across two containers on the same host somehow worked.

I suspect you’re not allocating enough cores. Gatling needs at least 4 to work properly. Remember that at a given time, you can only have 1 thread per core actually running.
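One way to check how many cores the injector JVM actually sees (inside a container this can differ from the host) is a tiny plain-Java check. This is a sketch: the class name `CpuCheck` is hypothetical, and the threshold of 4 is taken from the advice above. `Runtime.availableProcessors()` counts logical CPUs (hardware threads), and recent JDKs generally take container CPU limits into account.

```java
// Hypothetical helper: prints how many CPUs the JVM can see and compares it
// with the "at least 4 cores" advice from the post above.
final class CpuCheck {

    // Assumed threshold, taken from the forum advice.
    static final int MIN_CORES = 4;

    // Logical processors visible to this JVM (hardware threads, not physical cores).
    static int visibleCores() {
        return Runtime.getRuntime().availableProcessors();
    }

    static boolean meetsMinimum(int cores) {
        return cores >= MIN_CORES;
    }

    public static void main(String[] args) {
        int cores = visibleCores();
        System.out.println("JVM sees " + cores + " core(s); "
                + (meetsMinimum(cores) ? "meets" : "is below")
                + " the suggested minimum of " + MIN_CORES);
    }
}
```

Running it with the same JVM and container settings as the Gatling run shows what Gatling itself has to work with.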

Thanks for the input.

One of the Linux boxes has 12 vCPUs with 2 threads per core, and the others have 8 CPUs.

I once did a mini load run locally on a 6-core Mac and the result was the same.

I’ll check whether a simple script with a single request shows the same behaviour, then get back to you.

Thank you

Hi,

I upgraded the Gradle plugin to id 'io.gatling.gradle' version '3.7.6.3'

and used the available Scala demo project as a reference for gatling.conf.

The mean req/s has dropped drastically from 90 to 12 (for the same setup and load).

Before the simulation starts I see an error message “OpenSSL is enabled in the Gatling configuration but it’s not available on your architecture”.

Since I don’t understand what exactly caused the throughput to drop, for the time being the only option I have is to switch back to the old setup.

Meanwhile, I would appreciate any input from your side.

Thank you

Sorry, but I can’t play riddles. There’s definitely something wrong with your setup. 90 rps is very low for Gatling, let alone 12 rps. And such a drop is completely unheard of.

OpenSSL is enabled in the Gatling configuration but it’s not available on your architecture

Gatling 3.7.6 ships BoringSSL binaries for linux-aarch_64, linux-x86_64, osx-aarch_64, osx-x86_64 and windows-x86_64, so unless you’re running on some very unusual architecture such as FreeBSD or 32-bit Windows, you probably have corrupted or missing jars.
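To see which of those platform names your JVM corresponds to, a small plain-Java check can map the `os.name` and `os.arch` system properties onto the same naming scheme. The class `ArchCheck` and its normalization rules (for example `amd64` to `x86_64`, `aarch64` to `aarch_64`) are hypothetical and simplified; this is not Netty’s own platform detection.

```java
import java.util.Locale;
import java.util.Set;

// Hypothetical helper: maps JVM system properties onto the classifier names
// listed above and reports whether the current platform is among them.
final class ArchCheck {

    // Platforms the post says Gatling 3.7.6 ships BoringSSL binaries for.
    static final Set<String> SHIPPED = Set.of(
            "linux-aarch_64", "linux-x86_64",
            "osx-aarch_64", "osx-x86_64",
            "windows-x86_64");

    // Simplified normalization covering only the common cases.
    static String classifier(String osName, String osArch) {
        String os = osName.toLowerCase(Locale.ROOT);
        String arch = osArch.toLowerCase(Locale.ROOT);

        String osPart;
        if (os.startsWith("linux")) osPart = "linux";
        else if (os.startsWith("mac") || os.startsWith("darwin")) osPart = "osx";
        else if (os.startsWith("windows")) osPart = "windows";
        else osPart = os.replace(' ', '_'); // e.g. "freebsd"

        String archPart;
        switch (arch) {
            case "amd64": case "x86_64": archPart = "x86_64"; break;
            case "aarch64": case "arm64": archPart = "aarch_64"; break;
            default: archPart = arch; // e.g. "x86" on 32-bit JVMs
        }
        return osPart + "-" + archPart;
    }

    public static void main(String[] args) {
        String c = classifier(System.getProperty("os.name"), System.getProperty("os.arch"));
        System.out.println(c + (SHIPPED.contains(c) ? " is" : " is NOT") + " in the shipped list");
    }
}
```

If the printed classifier is in the list, the warning more likely points at the jars on the classpath than at the platform. When Netty is on the classpath, `io.netty.handler.ssl.OpenSsl.unavailabilityCause()` returns the exception explaining why OpenSSL could not be loaded, which is usually more informative than the warning itself.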

I really can’t help unless you provide a reproducer.

Reporting issue not reproducible: the report with high calculated response times (which are not evident in the application logs) cannot be reproduced at low load; the issue only shows up with the actual load scenario.

Before we close this, I want to highlight/reiterate a couple of points.

- Able to achieve target rps: a Thread.sleep in my data-fetch retry function somehow worked with the old plugin, but after the upgrade it was causing delays. I removed it and switched to a Gatling pause with exec, and the rps is back to normal.
- 100 rps is expected: it’s a load of 400 to 750 users over 3 hours, with response times of 5 to 10 seconds, so a mean count/sec of 100 is okay.
- openssl: the jars downloaded by the Gradle plugin include ‘Gradle: io.netty:netty-tcnative-boringssl-static:2.0.50.Final’, yet the OpenSSL message is still printed at startup, both on my local Mac and on the gcloud Debian Linux VM.
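The 100 rps estimate can be sanity-checked with Little’s law for a closed workload: throughput X equals the number of concurrently active users N divided by the response time R plus any think time Z. This is a rough sketch that assumes all 400 to 750 users are active at once and send requests back to back (Z = 0); the thread does not state the actual injection profile.

```latex
X = \frac{N}{R + Z}, \qquad Z = 0:
\quad
\frac{400}{10\ \mathrm{s}} = 40\ \mathrm{req/s}
\;\le\; X \;\le\;
\frac{750}{5\ \mathrm{s}} = 150\ \mathrm{req/s},
\qquad \text{e.g. } \frac{750}{7.5\ \mathrm{s}} = 100\ \mathrm{req/s}
```

Under those assumptions a mean of about 100 req/s sits inside the expected range; any pauses between requests would push it lower.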

Main concern: response time values.

In this recent report the metrics look fine until you read the max values and percentiles for KOs.

Cloud host utilization during the load test is normal.

Thank you

Thread.sleep

That’s your issue.

Never ever do that in code executed by Gatling’s own threads, typically in functions passed to the Gatling DSL. Period.
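To see why, here is a minimal plain-Java sketch (no Gatling involved) that compares blocking delays with scheduled delays on the same small pool. The 2-thread pool is an assumed stand-in for the limited number of threads a load generator runs on; it does not reproduce Gatling’s actual threading model, and the class name `SleepDemo` is hypothetical. In the Gatling DSL, the non-blocking equivalent is a `pause` step rather than a sleep inside a function.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Sketch: blocking a small shared pool with Thread.sleep throttles throughput,
// while scheduling the delay leaves the threads free.
final class SleepDemo {

    static final int THREADS = 2;    // assumed small worker pool
    static final int TASKS = 8;      // e.g. 8 virtual users each needing a delay
    static final long DELAY_MS = 200;

    private static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Each task holds its thread for DELAY_MS, so tasks run in waves of THREADS.
    static long blockingMillis() {
        ExecutorService pool = Executors.newFixedThreadPool(THREADS);
        CountDownLatch done = new CountDownLatch(TASKS);
        long start = System.nanoTime();
        for (int i = 0; i < TASKS; i++) {
            pool.execute(() -> {
                try {
                    Thread.sleep(DELAY_MS); // the worker thread can do nothing else meanwhile
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                done.countDown();
            });
        }
        awaitQuietly(done);
        pool.shutdown();
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
    }

    // Each task is scheduled to fire after DELAY_MS; no thread is held while waiting.
    static long scheduledMillis() {
        ScheduledExecutorService pool = Executors.newScheduledThreadPool(THREADS);
        CountDownLatch done = new CountDownLatch(TASKS);
        long start = System.nanoTime();
        for (int i = 0; i < TASKS; i++) {
            pool.schedule(done::countDown, DELAY_MS, TimeUnit.MILLISECONDS);
        }
        awaitQuietly(done);
        pool.shutdown();
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
    }

    public static void main(String[] args) {
        System.out.println("blocking:  " + blockingMillis() + " ms");
        System.out.println("scheduled: " + scheduledMillis() + " ms");
    }
}
```

With 8 tasks on 2 threads, the blocking variant needs at least 4 × 200 ms, about 800 ms, while the scheduled variant should finish in roughly 200 ms. The same effect inside a load tool shows up as lower throughput and inflated timings.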

openssl : the gradle plugin downloaded jars include ‘Gradle: io.netty:netty-tcnative-boringssl-static:2.0.50.Final’ it still throws the open ssl message in the beginning.

Investigating

openssl : the gradle plugin downloaded jars include ‘Gradle: io.netty:netty-tcnative-boringssl-static:2.0.50.Final’ it still throws the open ssl message in the beginning.

This one is a Gradle issue with transitive dependencies pulling artifacts with a classifier. In the next release, we’re going to pull them explicitly.

Hi, I hope you’re doing well.

Upgraded to 3.8 and the Gradle issue is resolved.

Coming back to the inaccuracy in the generated report, I wanted to point something out.

The high response times (particularly the max and percentile values) seem to be a miscalculation rather than the result of any added client-side delay.

The values in the stats and “Response Time Percentiles over Time (OK)” sections differ from the ones displayed by the response time distribution graph.

When comparing the report with the one generated by our internal load simulator utility and with the nginx logs, the values from the Response Time Distribution chart appear much closer.

Do you understand that the time you measure server side doesn’t account for DNS resolution time, TCP connect time, TLS handshake time and any network overhead ?
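To make those client-side phases concrete, here is a minimal plain-Java sketch that times DNS resolution and the TCP handshake separately for one fresh connection. It is illustrative only: the class `PhaseTimer` is hypothetical, TLS handshake time is not measured, and against localhost both numbers are tiny. The point is that they are spent before the server sees the request, so a server-side log never includes them.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.ServerSocket;
import java.net.Socket;

// Sketch: time client-side phases that happen before the server logs anything.
final class PhaseTimer {

    // Returns {dnsNanos, connectNanos} for one fresh connection to host:port.
    static long[] measure(String host, int port) {
        try {
            long t0 = System.nanoTime();
            InetAddress addr = InetAddress.getByName(host); // DNS lookup (may be served from a cache)
            long t1 = System.nanoTime();
            try (Socket socket = new Socket()) {
                socket.connect(new InetSocketAddress(addr, port), 5_000); // TCP handshake, 5 s timeout
            }
            long t2 = System.nanoTime();
            return new long[] {t1 - t0, t2 - t1};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Self-contained demo: measure against a throwaway local server.
    // The kernel completes the handshake even though accept() is never called.
    static long[] measureLocal() {
        try (ServerSocket server = new ServerSocket(0)) {
            return measure("localhost", server.getLocalPort());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        long[] t = args.length == 2 ? measure(args[0], Integer.parseInt(args[1])) : measureLocal();
        System.out.printf("DNS: %.3f ms, TCP connect: %.3f ms%n", t[0] / 1e6, t[1] / 1e6);
    }
}
```

Pointing it at the real API host and port from the injector machine gives a rough idea of how much of the client-measured time never reaches nginx’s logs.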

That’s correct, but I’m comparing against another Java-based load test utility, which also measures from the client side.

And even without that comparison, the data computed in different sections of the Gatling report itself don’t add up, or I’m reading it wrong.

The Response Time Distribution chart has so far shown believable figures. Or is it that the chart doesn’t plot all the data points?

Our team is in the process of migrating our scripts to Gatling and I’m the point of contact for that.

For the runs, they found a relative drop in the final throughput. My explanation was that the effective rps is higher with the Gatling run, so the test isn’t comparable with the one executed through the old utility. We are planning to do a run with reduced rps and close it.

However, I’m not in a position to convince them about the reported response time values, especially for the APIs that take no longer than a second but here record response times as high as 10 minutes.

Please share your simulation.log file so we can check if there’s anything wrong with the calculations.
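For anyone who wants to cross-check the report independently, here is a sketch that recomputes per-request response times (end minus start) and a few statistics straight from a text simulation.log. The column layout is an assumption based on the tab-separated format of Gatling 3.x releases of that era (`REQUEST`, groups, name, start ms, end ms, status, message); check it against your own file and adjust the indexes if needed. The class `LogStats` is hypothetical, and it uses nearest-rank percentiles, which may differ slightly from Gatling’s own percentile computation.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Sketch: recompute response time stats per request name from simulation.log.
final class LogStats {

    // Assumed column layout of a REQUEST record (verify against your own file):
    // REQUEST \t groups \t name \t startMs \t endMs \t status \t message
    static final int NAME = 2, START = 3, END = 4, STATUS = 5;

    // Response times (endMs - startMs) per request name; statusFilter may be null, "OK" or "KO".
    static Map<String, List<Long>> responseTimes(List<String> lines, String statusFilter) {
        Map<String, List<Long>> byName = new TreeMap<>();
        for (String line : lines) {
            String[] f = line.split("\t", -1);
            if (f.length <= STATUS || !f[0].equals("REQUEST")) continue;
            if (statusFilter != null && !f[STATUS].equals(statusFilter)) continue;
            long rt = Long.parseLong(f[END]) - Long.parseLong(f[START]);
            byName.computeIfAbsent(f[NAME], k -> new ArrayList<>()).add(rt);
        }
        return byName;
    }

    // Nearest-rank percentile on an ascending array; p in (0, 100].
    static long percentile(long[] sorted, double p) {
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        return sorted[Math.max(rank, 1) - 1];
    }

    static String summary(String name, List<Long> values) {
        long[] s = values.stream().mapToLong(Long::longValue).sorted().toArray();
        return String.format("%s count=%d p50=%d p95=%d p99=%d max=%d",
                name, s.length, percentile(s, 50), percentile(s, 95),
                percentile(s, 99), s[s.length - 1]);
    }

    public static void main(String[] args) throws IOException {
        List<String> lines = Files.readAllLines(Path.of(args[0]));
        String status = args.length > 1 ? args[1] : null; // optional: "OK" or "KO"
        responseTimes(lines, status).forEach((name, rts) -> System.out.println(summary(name, rts)));
    }
}
```

Running it as `java LogStats.java simulation.log OK` prints one line per request name, so the max and percentiles can be compared directly with both the stats table and the distribution chart.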

Then, have you considered that you’re saturating the injector host, or that you have some network issues?

How to share the zip file?

Here are the aggregated values from one of the simulation logs.

(The highlighted one is the transaction in the last shared screenshot.)

When I try to find this max value in the “Response Time Distribution” graph, it isn’t there. The max value I see is 25 seconds, not 294.

I mean this: the last point on the first graph is 25,018 ms instead of 293,953 ms.

You can use any file sharing platform, even GDrive.

Sorry, but we need the original file, there’s nothing we can do with just images.